Autonomous flight will arrive gradually, as machines take over more of the pilot's workload. The goal will be approached stepwise: from relatively simple pilot assistance, through machine control under pilot supervision, to full autonomy.

In a major step forward, Daedalean has been working with Leonardo Helicopters on a year-long flight-testing project.

Leonardo engineers put Daedalean's AI-enabled OmniX visual awareness suite through a rigorous set of trials, with the goal of demonstrating the performance of OmniX and establishing how it can be used to support situational awareness for a pilot or an unmanned system operator.

For Leonardo and Daedalean, the flight tests marked a step into new territory. For Leonardo, the project represents one of its first forays into practical, real-world examples of how artificial intelligence can support flight operations. For Daedalean, it was an opportunity to show that its systems can assist helicopter pilots today, as part of a progression toward a system for full autonomy.

At present, OmniX is an experimental demonstration system that gives users a chance to observe and test functions that are soon coming to market. It comprises aircraft-mounted cameras, a computer, and an interface display. Current capabilities include identifying aerial traffic (including birds and drones), determining location in GPS-denied environments, and offering landing guidance. Leonardo reported that the test analysis yielded outstanding results.

Installation and Calibration

OmniX has been operated by numerous users on various aircraft types (fixed-wing and rotary), but as experimental equipment, it requires custom installation, needing comprehensive drawings and schematics of the host system, which include all components, electrical circuits, and power requirements. Leonardo engineers at the PZL-Swidnik facility in Lublin, Poland, where flight testing took place, painstakingly installed the Daedalean equipment onto, in this instance, the SW4 and SW4 Solo RUAS/OPH helicopters.

Both helicopters were equipped with the same setup to allow for flexibility in testing. The core computer was installed in the cargo compartment located behind the cabin. A steel cylindrical enclosure housing the cameras was mounted on the nose tip. An Android tablet allowed the flight test engineer, sitting alongside the pilot, to view in real time the system's performance. Data was also recorded on every flight for later analysis.

With the equipment installed, Leonardo's engineers conducted standard electromagnetic compatibility tests. While still safely on the ground, both helicopters' onboard OmniX systems were initiated to simulate the electromagnetic environment during typical flight operations. Engineers tested to see if the equipment would adversely affect the helicopter's onboard equipment, especially the ability to transmit and receive communications on frequencies used for air traffic control. It was gratifying to see that electromagnetic emissions from the system were well within acceptable limits and that no impact was observed on any of the helicopter's systems.

Visual Traffic Detection

The OmniX visual awareness system answers three essential questions: "Where can I fly?" "Where am I?" and "Where can I land?" The system that Leonardo spent the most time testing, the Visual Traffic Detection (VTD) system, answers the first of these questions.

To avoid mid-air collisions, pilots can draw on several sensing systems such as ADS-B, radar, and TCAS, but none of these is vision-based. Daedalean's approach to avoiding mid-air collisions is to return to the basics of flight: a pilot looking out the cockpit window. But unlike pilots' eyes, computers can look in many directions at once, see further, and are never distracted.

The goal of the tests was to assess how well the VTD can spot, track, and recognize aircraft and other traffic in its cameras' field of view in daytime Visual Meteorological Conditions.

The assessments were broken down into three distinct encounter scenarios in which two helicopters would approach each other: (1) head-on, (2) perpendicular to one another, and (3) one helicopter following another.

During the head-on tests, the two helicopters flew at 3000 and 3500 ft, respectively, switching between being below and above the horizon; typically, it's harder to pick out an aerial object that's below the horizon. Taking advantage of the SW4 being primarily white and the SW4 Solo RUAS/OPH being black, several combinations were used: white against the sky and black against the ground, etc., ensuring all possible scenarios were covered.

As expected, performance was ideal in high-contrast combinations. Typically, the system recognized objects as far away as 2.5 nautical miles, according to the distance readings from OmniX, and it showed exceptional ability to detect traffic at distances below 2.5 nautical miles. This is notable because a helicopter approaching head-on is hard to spot: its frontal profile is much smaller than that of other aircraft, such as fixed-wing airplanes.

For the perpendicular test, one helicopter (the 'intruder') crossed the path of the other, flying from left to right. The altitude separation between the helicopters was about 500 ft, with both between 2500 ft and 3500 ft. The intruder flew above and below the horizon, and each helicopter took a turn as the intruder.

The system generally performed well in detecting passing helicopters, recognizing them as soon as they came within the camera's range. The performance was especially good below two nautical miles, as expected for the system design, and some detections occurred from as far away as three miles. Detecting aircraft against the sky was never an issue, while detection against the ground was usually reliable but depended on factors like ground illumination, the angle of the helicopter, and its color.

For the third encounter scenario, in which one helicopter trailed the other, the altitude difference between the two aircraft was somewhat larger, between 1,500 and 3,000 ft. The flight test team performed separate tests at three different distances: 0.4 to 0.5 nautical miles, 0.7 to 0.8 nautical miles, and 1.0 to 1.2 nautical miles. The tracking was continuous and consistent.

Overall, the effectiveness of the VTD in recognizing and tracking helicopter intruders varied with the conditions and the surrounding environment. Most objects within 2.5 nautical miles (about 4.6 kilometers) were detected within two seconds, and high contrast between an object and its background made the object easier to spot. A complex background dominated by the same color as the aircraft makes detection slightly harder. This is inevitable for any vision-based tool, including, of course, the human eye.
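To put the detection range in perspective, the following minimal sketch converts nautical miles to kilometers and estimates how long two aircraft would take to close a given distance. The 200-knot closing speed used in the usage note is a hypothetical figure chosen for illustration; it is not taken from the flight tests, and the function names are invented here.

```python
# Illustrative arithmetic only; the closing speed is an assumed value,
# not a figure reported from the Leonardo/Daedalean flight tests.

NM_TO_KM = 1.852  # the international nautical mile is defined as 1,852 m


def nm_to_km(distance_nm: float) -> float:
    """Convert a distance from nautical miles to kilometers."""
    return distance_nm * NM_TO_KM


def seconds_to_close(range_nm: float, closing_speed_kt: float) -> float:
    """Time for two aircraft to close `range_nm` at a constant closing speed.

    One knot is one nautical mile per hour, so range / speed gives hours.
    """
    if closing_speed_kt <= 0:
        raise ValueError("closing speed must be positive")
    return range_nm / closing_speed_kt * 3600.0
```

For example, `nm_to_km(2.5)` is roughly 4.63 km, and at an assumed closing speed of 200 knots, `seconds_to_close(2.5, 200)` is about 45 seconds between detection at 2.5 nautical miles and the point where the aircraft would meet.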

The advantage over other systems is that Daedalean's systems don't rely on any ground infrastructure, nor do they rely on other aircraft's broadcast signals. That's important: in some countries, up to 80% of air traffic doesn't use ADS-B.

Visual Positioning System

The Visual Positioning System (VPS) acts as a backup to satellite-based navigation systems like GPS. The goal of testing the VPS was to demonstrate to the Leonardo team how VPS maintains location information – including GPS coordinates, altitude above ground, and speed – based only on visual landmarks.

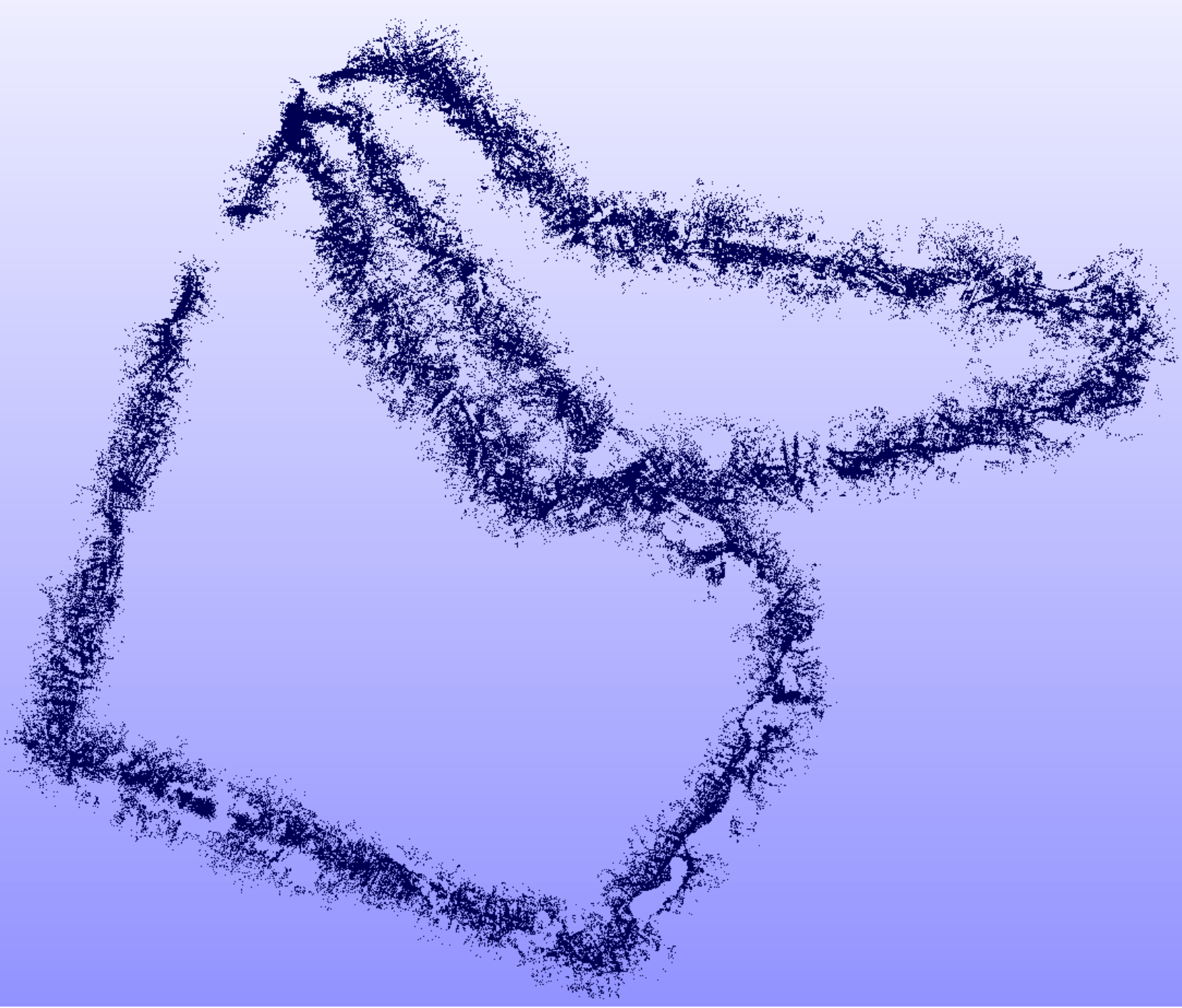

Before VPS can be used over a given area, the system must fly what are called "survey flights" to calibrate landscape features to GPS coordinates. The VPS records the landscape through its downward-facing fisheye-lens camera, and the onboard computer marks hundreds of thousands of features across the landscape. If GPS were to go down, the VPS could use those landscape feature marks to determine location by comparing them to the pre-calibrated GPS coordinates. The more ground features marked, the better the system works.
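The idea of recovering position from surveyed landmarks can be illustrated with a toy example. This is a deliberately simplified sketch, not Daedalean's algorithm: it assumes a flat local coordinate frame in meters, a handful of named landmarks instead of hundreds of thousands of features, and that each observed landmark has already been matched and its offset from the aircraft measured. The `SURVEYED` table and `estimate_position` function are hypothetical.

```python
from statistics import fmean

# Hypothetical survey result: landmark id -> (east_m, north_m) in a local
# frame that was tied to GPS coordinates during the survey flight.
SURVEYED = {
    "tower": (1200.0, 450.0),
    "bridge": (980.0, 610.0),
    "barn": (1105.0, 300.0),
}


def estimate_position(observations):
    """Estimate the aircraft's (east_m, north_m) from matched landmarks.

    `observations` maps a landmark id to that landmark's offset from the
    aircraft, (east_m, north_m), as derived from the camera image.
    Each matched landmark yields one position candidate; the candidates
    are averaged. Landmarks absent from the survey are ignored.
    """
    candidates = [
        (SURVEYED[lid][0] - d_east, SURVEYED[lid][1] - d_north)
        for lid, (d_east, d_north) in observations.items()
        if lid in SURVEYED
    ]
    if not candidates:
        return None  # no surveyed landmark in view: this toy cannot produce a fix
    east = fmean(c[0] for c in candidates)
    north = fmean(c[1] for c in candidates)
    return east, north
```

With offsets of `(200.0, 50.0)` to the tower, `(-20.0, 210.0)` to the bridge, and `(105.0, -100.0)` to the barn, every candidate agrees and the estimate is `(1000.0, 400.0)`. Averaging over more matched landmarks reduces the influence of any single measurement error, which is consistent with the observation that more marked features give better results.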

But what if a helicopter is flying over a bland, featureless landscape? Could the VPS still perform well? Leonardo Helicopters' engineers sought to find out.

Engineers decided to fly two routes. First, they flew an optimal route aimed at showcasing the nominal performance of the system. It included landscapes with a large number of physical details and landmarks such as buildings, roads, high voltage power lines, and distinctively shaped areas of farmlands and woods. The second route explored the system’s limits. The helicopter flew over areas where the terrain lacked easily determined features, such as areas with large forests, farms, and lakes.

In both cases, the system performed well, but the tests went further.

Leonardo’s engineers looked at how the system would perform if the helicopter left the surveyed areas. They wanted to see if the system could maintain location information outside pre-mapped areas. What they determined was that the accuracy of the reported position slowly dropped, but when the aircraft returned to the surveyed area, the correct position was immediately restored.

While these results verify the value of VPS in a world where GPS is both heavily relied upon and unfortunately fragile, they also point to the necessity of extensive surveying by every user of the VPS.

However, Daedalean engineers are developing future generation systems that will not require surveys by individual users. Instead, third-party imagery will be used as reference, such as satellite images or data gathered from survey flights flown by other aircraft.

Visual Landing System

The most dangerous part of flying is landing. Daedalean's Vertical Visual Landing System (VVLS) helps helicopter pilots achieve a safe landing. Leonardo engineers tested the VVLS by performing landing maneuvers on a two-meter square marker staked to the ground. The marker resembles a QR code and is called an AprilTag, a design developed specifically for computer vision at the University of Michigan. The pilot made horizontal approaches at altitudes ranging from 50 to 15 feet. He also made approaches at a 45-degree angle, descending through altitudes ranging from 50 to 5 feet. The task of the VVLS was to recognize the precise location and orientation of the landing site using the downward-facing camera and to provide guidance to the pilot via the tablet interface during landing maneuvers.

To test the VVLS, the flight test engineer assessed the system’s suggested maneuvers in real-time by monitoring the tablet interface. This displays a live video feed from the downward-facing camera, a crosshatch calibrated to the ground marker, altitude above the marker, and directions for positional adjustments.
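As a rough illustration of how a marker's position in a downward-facing image can be turned into positional adjustments, here is a minimal sketch. It is not the VVLS implementation: it assumes an ideal pinhole camera pointing straight down, with the top of the image aligned with the aircraft's nose, and every name and parameter (`marker_offset_m`, `guidance`, the focal length in pixels, the 0.5 m tolerance) is hypothetical.

```python
def marker_offset_m(px, py, cx, cy, focal_px, altitude_m):
    """Ground offset of the marker from the point directly below the camera.

    (px, py) is the marker center in the image, (cx, cy) the image center,
    `focal_px` the focal length in pixels, `altitude_m` the height above the
    marker. Returns (right_m, forward_m) under a pinhole-camera assumption.
    """
    right_m = altitude_m * (px - cx) / focal_px
    forward_m = altitude_m * (cy - py) / focal_px  # image y grows downward
    return right_m, forward_m


def guidance(right_m, forward_m, tolerance_m=0.5):
    """Turn an offset into simple textual hints for the pilot."""
    hints = []
    if right_m > tolerance_m:
        hints.append(f"move right {right_m:.1f} m")
    elif right_m < -tolerance_m:
        hints.append(f"move left {-right_m:.1f} m")
    if forward_m > tolerance_m:
        hints.append(f"move forward {forward_m:.1f} m")
    elif forward_m < -tolerance_m:
        hints.append(f"move back {-forward_m:.1f} m")
    return hints or ["hold position"]
```

For a marker seen 100 pixels right of center from 12 m up with an assumed 800-pixel focal length, `marker_offset_m(740, 360, 640, 360, 800, 12)` gives `(1.5, 0.0)` and `guidance(1.5, 0.0)` returns `["move right 1.5 m"]`. A real system would also correct for lens distortion and aircraft attitude, which this sketch ignores.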

The flight engineers reported that they found the system useful, commenting that it could be a very effective tool, for example, in suspended load operations for conventional pilot-controlled helicopters. They noted it would be especially effective during a high hover when visibility under the helicopter is very limited, and for landings in confined spaces such as aboard small ships. In addition, they suggested that the VPS could even serve as the primary source of information for lightweight drones tasked with carrying shipments in urban environments.

Looking forward

Approximately 40 flight hours were flown, divided between preparation and evaluation. Across more than 50 encounters, OmniX successfully detected the presence of other aircraft more than 90% of the time. Working with Leonardo Helicopters, a highly respected OEM, allowed Daedalean to demonstrate that an AI-enabled system such as OmniX can provide immediate pilot assistance, a significant step toward full flight autonomy.

Co-funded by Innosuisse – Swiss Innovation Agency.

Eurostars project number: E!69 EST-LHD-DDLN