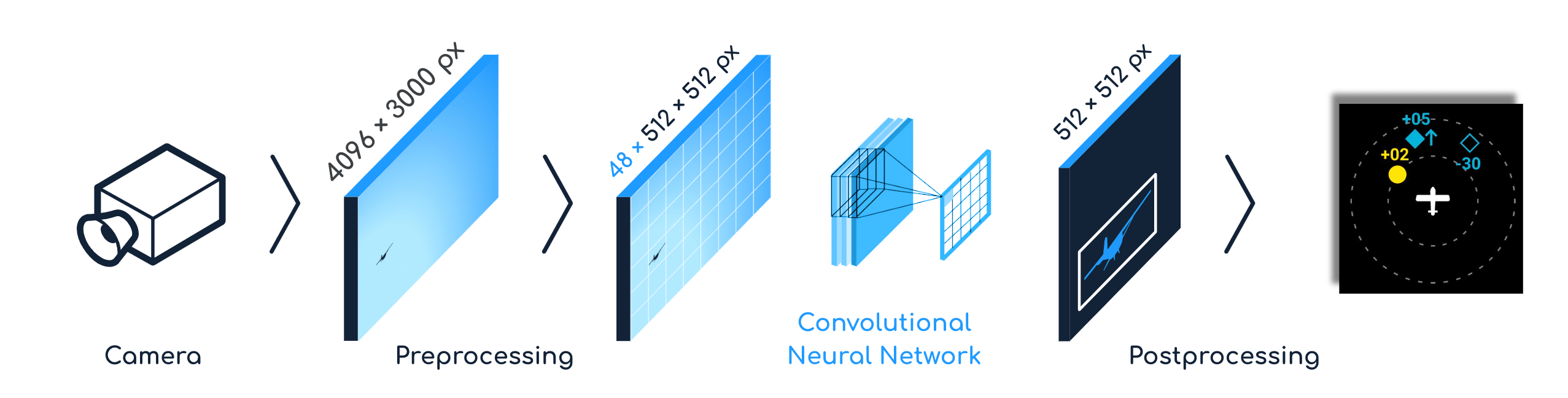

Daedalean’s AI-enabled visual awareness system is designed to detect aerial traffic such as aircraft, birds, and drones – anything that flies. It relies on input from cameras installed on the user’s aircraft. The captured images are processed in real time on the onboard computer by our algorithms, which are computer vision applications based on neural networks.

The performance of the visual system depends, among other factors, on the quality of the images that the neural networks receive from the cameras. An aircraft several nautical miles away covers only a couple of pixels in the camera image. To make sense of such an image, those pixels need to be very sharp and the colors very precise.

When Daedalean was founded, the initial plan was to operate as a software company focused on creating certifiable machine learning-based applications for civil aviation. Then we found that the necessary hardware (performant, compact, lightweight, energy-efficient, durable enough to withstand harsh environmental conditions, and, most importantly, certifiable for civil aviation) simply didn't exist. So we had to design it ourselves. Our previous posts describe our other ventures into computing hardware design: FPGAs and a tensor accelerator.

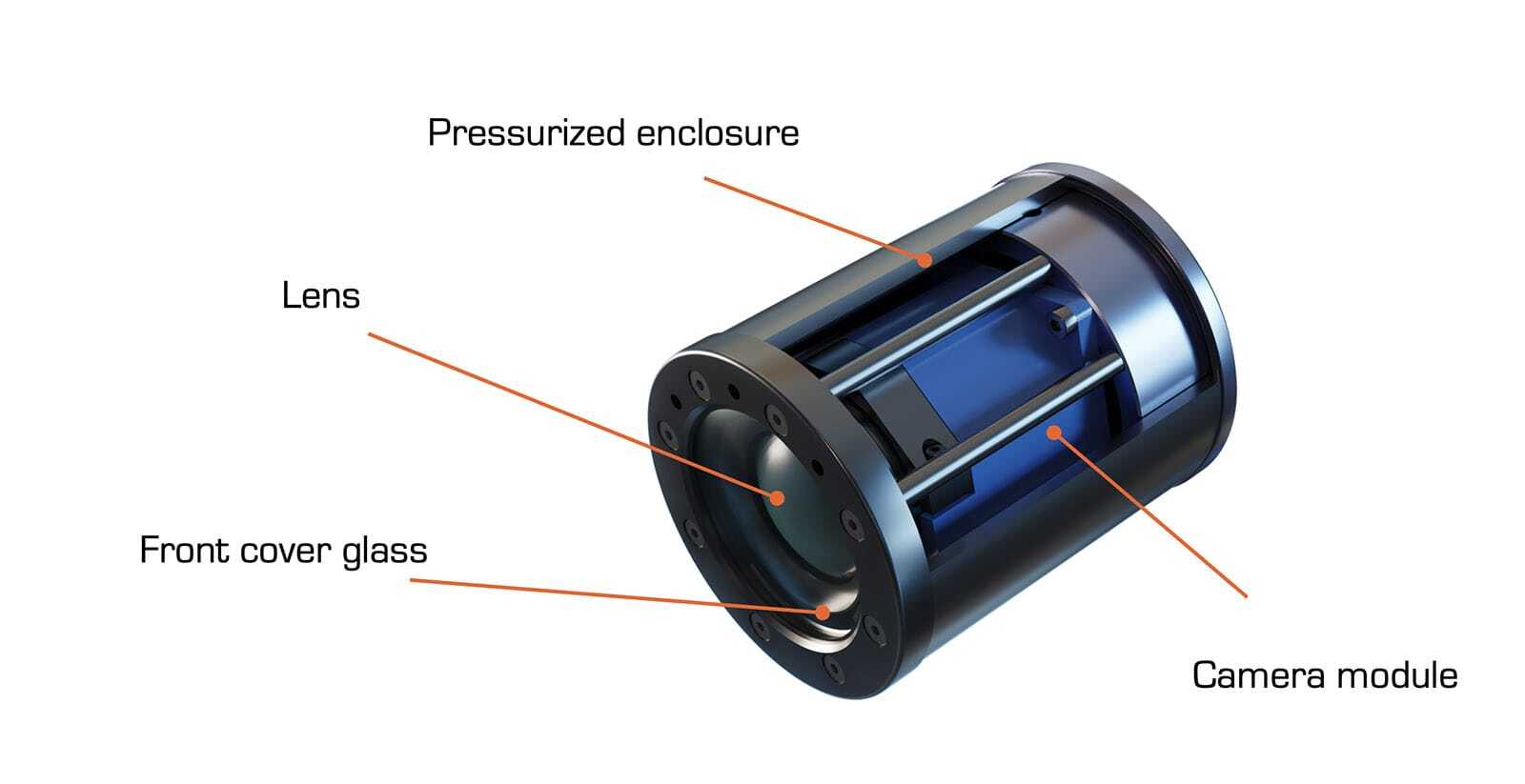

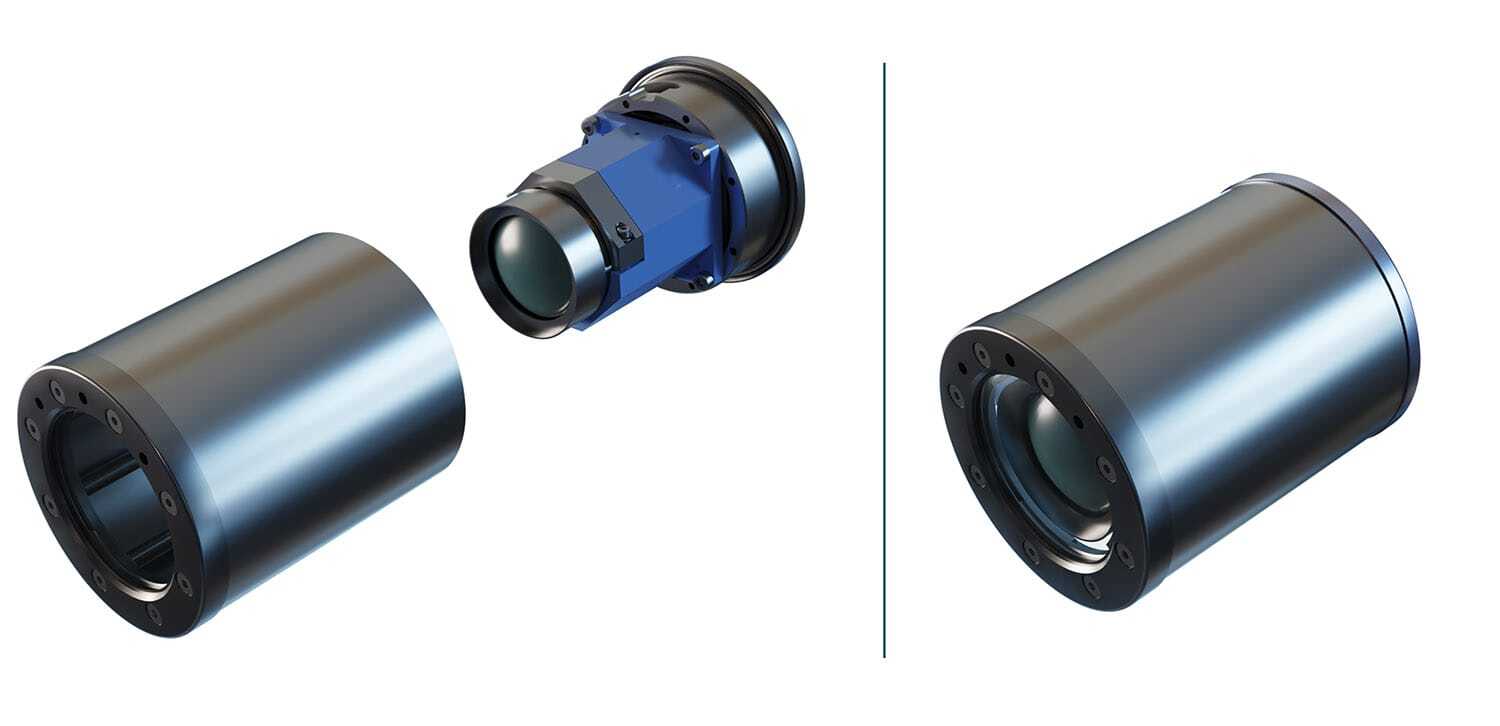

But the same prerequisites apply to cameras – or VCUs (Visual Capture Units), as we call them using an internally coined acronym. VCUs are more than just cameras: each consists of an image sensor, a data processing unit for capturing and processing high-resolution images, and multiple components that help ensure the quality and reliability of data under challenging environmental conditions.

Based on our machine learning use cases, particularly our visual traffic detection system, we can now specify the requirements and offer a reference architecture for aviation-grade computer vision applications – a problem this market had yet to solve. Until now.

During this project, we worked iteratively with several companies to test and validate a range of solutions. As a result, we now have unique hardware assembled from components you can’t buy anywhere else: they were created exclusively for us.

Requirements

We looked at state-of-the-art technology, researched use cases, and formulated our functional performance requirements.

It was simple, really, and very logical:

- The optical performance must match our needs, such as detecting aircraft at a distance of 5 miles. For that, we calculated the size of the smallest targets our neural network could detect at a specific distance, both in real-world measurements and in pixels.

- Then there's certification: the VCU development process must comply with the DO-254 standard for hardware (cameras and lenses) and the DO-178C standard for software (image processing).

- Then there are the environmental requirements: testing under the DO-160G standard must show that the camera module is protected against air and moisture intrusion and is resilient to temperature, vibration, and lightning strikes.

- Finally, the entire module should be efficient in terms of size, weight, and power consumption.

As we defined these requirements, we also reviewed what equipment was available on the market. The various tradeoffs started to sound like a classic joke: “Good, Fast, Cheap: You can only pick two.”

There are off-the-shelf cameras for the aerospace market: robust, lightweight, and certified. However, they are typically used for recording video or providing pilots with direct video streams. Their images may look good to the human eye, but for computer vision systems they are decidedly insufficient. Neural networks require raw image data; they find patterns in pixel-level details and color aberrations so tiny they are invisible to the human eye.

Other cameras are made specifically for computer vision applications and offer excellent resolution and contrast. However, they are usually intended for industrial applications (like drone inspections of interior spaces). Their lenses have moving parts, are not properly sealed, and are not designed to withstand the extreme temperatures and vibrations encountered in aviation.

Finally, there are satellite cameras: good resolution and contrast, and robust enough to withstand harsh conditions in space. But their size, weight, and power consumption far exceed aviation limits.

No single camera could satisfy all the requirements. A completely new camera had to be created.

Partners

It's really important to find companies to work with who are willing to take on something challenging and invest effort and time. They are more than just suppliers. They are partners.

For the camera electronics, we found KAYA Instruments, which offered a tiny, rugged, and low-power camera. They implemented a small prototyping project that was an instant success, and we continued to work together on the design and development of the VCU. From the beginning, KAYA was very flexible, open, and happy to do this project with us.

For the lens, we worked with Thorlabs. We expected a high level of professionalism from a company with such extensive experience, and indeed, Thorlabs lenses performed much better than the others. But we were also happy to find that their approach emphasizes responsiveness, an agile design process, and engagement!

For the glass cover, Dontech, a developer of custom optical solutions, was an ideal partner. Their cutting-edge technology for manufacturing precision coatings is matched by the professionalism of their engineering and sales teams, who communicate well, respond quickly, and are able to meet our most demanding requirements.

During this project, we learned how important it is to find the right partners to help bring a customized design to fruition. Those we worked with truly partnered with us instead of simply taking on the role of a supplier. That resulted in a highly productive relationship and a product that fits our needs.

A separate challenge was the compatibility of the components: When they are developed by several different companies, we need to ensure they work well together. The interface between the camera and the lens is crucial, as improper assembly and focusing can ruin optical performance.
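
To give a sense of the scale involved in focusing, a common rule of thumb puts the image-side depth of focus at roughly 2·N·c, where N is the f-number and c is the acceptable blur-circle diameter (often taken as one or two pixels). Below is a minimal sketch comparing that budget with the thermal expansion of a hypothetical lens-to-sensor spacer (ΔL = α·L·ΔT). All numbers are illustrative assumptions, not VCU parameters, and the sketch ignores the lens’s own thermal focus shift:

```python
def depth_of_focus_um(f_number: float, blur_circle_um: float) -> float:
    """Total image-side depth of focus (roughly +/- half of it around best
    focus), using the rule of thumb 2 * N * c."""
    return 2.0 * f_number * blur_circle_um


def thermal_shift_um(cte_per_k: float, length_mm: float, delta_t_k: float) -> float:
    """Change in length of a mount or spacer, alpha * L * dT, in micrometres."""
    return cte_per_k * (length_mm * 1000.0) * delta_t_k


if __name__ == "__main__":
    # Hypothetical: f/2.8 lens, 3.45 um pixels used as the blur circle,
    # 12 mm aluminium spacer (alpha about 23e-6 per K), 100 K temperature swing.
    tolerance = depth_of_focus_um(2.8, 3.45) / 2   # +/- tolerance around best focus
    shift = thermal_shift_um(23e-6, 12.0, 100.0)
    print(f"focus tolerance: +/-{tolerance:.1f} um, thermal shift: {shift:.1f} um")
```

With these made-up numbers the spacer alone moves by more than the focus tolerance, which is why assembly, focusing, and material choices at the lens-to-camera interface matter at the micrometre level.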

Camera Module (KAYA Instruments)

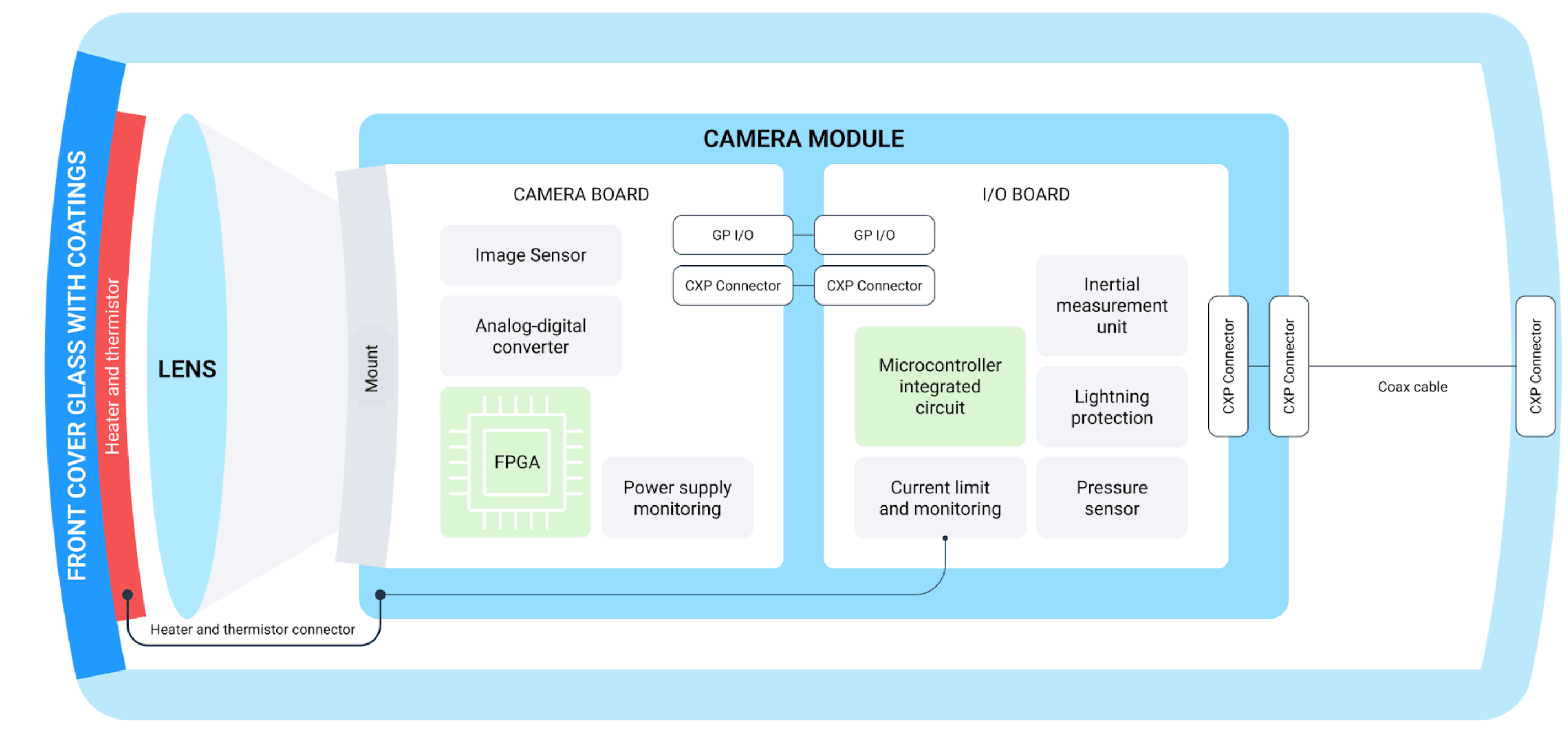

Inside every VCU, we have a camera module with two main circuit boards: the camera board and the input-output (I/O) board.

- The camera board creates frames – digital images consisting of pixels that the neural network can analyze – from analog images captured by the sensor.

- The I/O board handles data coming into and going out of the module. It makes the camera airworthy by adjusting the data to compensate for various environmental factors. For this, it uses several components: IMU (inertial measurement unit) sensors that attach orientation and altitude data to every image; sensors for internal temperature, humidity, and pressure; and components for lightning protection and power management.
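
Attaching orientation data to every image comes down to matching timestamps between two data streams. Below is a minimal sketch of nearest-timestamp matching, assuming the camera and IMU share a common microsecond clock; the `ImuSample` and `TaggedFrame` types, their fields, and `tag_frame` are hypothetical illustrations, not the actual VCU data format:

```python
import bisect
from dataclasses import dataclass
from typing import List


@dataclass
class ImuSample:
    t_us: int          # timestamp in microseconds (clock shared with camera, assumed)
    roll_deg: float
    pitch_deg: float
    yaw_deg: float


@dataclass
class TaggedFrame:
    frame_id: int
    t_us: int
    imu: ImuSample     # orientation sample attached to this frame


def tag_frame(frame_id: int, frame_t_us: int, imu_log: List[ImuSample]) -> TaggedFrame:
    """Attach the IMU sample nearest in time to a frame.

    `imu_log` must be non-empty and sorted by timestamp.
    """
    times = [s.t_us for s in imu_log]
    i = bisect.bisect_left(times, frame_t_us)
    # The nearest sample is either the last one before the frame time or
    # the first one at/after it; keep only indices that exist.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(imu_log)]
    best = min(candidates, key=lambda j: abs(imu_log[j].t_us - frame_t_us))
    return TaggedFrame(frame_id, frame_t_us, imu_log[best])
```

Nearest-neighbour matching keeps the sketch short; a real system could instead interpolate between the two neighbouring samples or trigger the IMU read from the sensor’s exposure signal.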

Lens (Thorlabs)

The VCU was developed in parallel with Daedalean’s entire visual awareness suite, and we had been testing the suite’s performance before we had the custom-designed cameras and lenses. So, at first, we used off-the-shelf lenses and cameras whose optical performance was good enough to start collecting data and training the neural network. To determine exactly which technical parameters the system’s cameras needed, we conducted many tests to validate our assumptions.

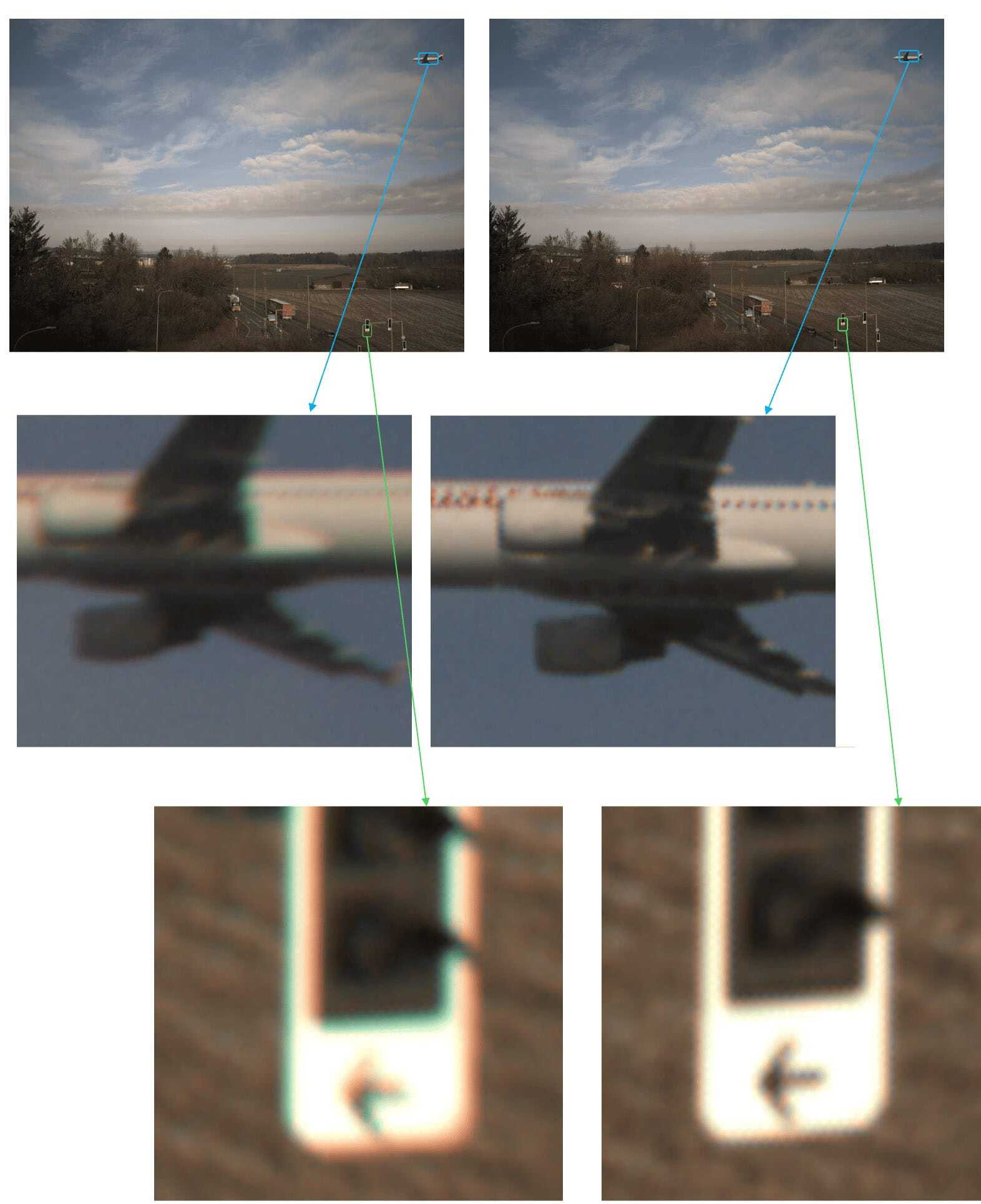

We calculated the size of the smallest targets that our neural network could detect at a specific distance, both in real-world measurements and in pixels. For example, a Cessna 172 at a distance of 1,850 meters occupies 5.5 pixels in the image from a 12-megapixel camera with a 12 mm lens. We conducted numerous optical feasibility studies to establish optical requirements for both side-facing and forward-facing lenses across a wide variety of aircraft. We evaluated a number of lenses in the lab and in real flight tests before deciding on the final requirements.
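
Pixel figures like the Cessna example follow from basic projection geometry. Below is a minimal sketch, assuming an ideal pinhole camera and the small-angle approximation. Real lenses add distortion, and the target dimension that matters depends on the viewing aspect. The function names and the example inputs are hypothetical, not Daedalean’s parameters:

```python
def pixels_on_target(target_size_m: float, distance_m: float,
                     focal_length_mm: float, pixel_pitch_um: float) -> float:
    """Approximate number of pixels a target spans on the sensor.

    Pinhole model, small-angle approximation: the target's image on the
    sensor is focal_length * target_size / distance; dividing by the
    pixel pitch converts that length to pixels.
    """
    image_size_m = (focal_length_mm * 1e-3) * target_size_m / distance_m
    return image_size_m / (pixel_pitch_um * 1e-6)


def max_range_m(target_size_m: float, min_pixels: float,
                focal_length_mm: float, pixel_pitch_um: float) -> float:
    """Largest distance at which the target still spans `min_pixels` pixels."""
    return (focal_length_mm * 1e-3) * target_size_m / (min_pixels * pixel_pitch_um * 1e-6)


if __name__ == "__main__":
    # Hypothetical inputs: a 10 m target, a 12 mm lens, and 4 um pixels.
    print(pixels_on_target(10.0, 1000.0, 12.0, 4.0))   # about 30 pixels at 1 km
    print(max_range_m(10.0, 5.0, 12.0, 4.0))           # about 6000 m for 5 pixels
```

In this model, detection range grows linearly with focal length and inversely with pixel pitch. A longer lens, however, narrows the field of view for a given sensor, which is one of the trade-offs behind choosing separate side-facing and forward-facing optics.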

Last year, Thorlabs delivered the first lenses to us. Initially, we had sought lenses able to withstand the temperature range required by the DO-160 standard and hadn’t emphasized image quality requirements. But it turned out that custom-designing the lenses also improved image quality beyond our expectations. The static images below show a clear difference: on the left is an image taken with the commercial off-the-shelf (COTS) lens we previously used, and on the right is an image captured by the Thorlabs lens.

The better contrast, definition, and detail offered by the Thorlabs lens were made possible by specifying requirements for parameters such as transmission (how much light gets through without loss) and the Modulation Transfer Function (MTF). MTF describes how much of the scene’s contrast is preserved at each level of detail (spatial frequency), and therefore how much blur and aberration are introduced along the path of light from the lens to the image sensor and then to the digital image. Specifying precise requirements was crucial for balancing the trade-offs between high image quality and the size and complexity of the lens. MTF, resolution, contrast, transmission, and field of view are all key parameters for our functionality.
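
As a rough illustration of what an MTF number means, the sketch below computes MTF at one spatial frequency as the ratio of image contrast to object contrast (Michelson modulation). For reference, it also computes the textbook diffraction limit of an aberration-free lens with a circular aperture, compared against the sensor’s Nyquist frequency. The wavelength, f-number, and pixel pitch used in the tests are illustrative assumptions, not the Thorlabs lens specification; a real lens falls below the diffraction curve and its MTF must be measured:

```python
import math


def modulation(i_max: float, i_min: float) -> float:
    """Michelson contrast (modulation) of a periodic pattern."""
    return (i_max - i_min) / (i_max + i_min)


def measured_mtf(obj_max: float, obj_min: float,
                 img_max: float, img_min: float) -> float:
    """MTF at a single spatial frequency: image modulation / object modulation."""
    return modulation(img_max, img_min) / modulation(obj_max, obj_min)


def diffraction_mtf(freq_cyc_per_mm: float, wavelength_um: float,
                    f_number: float) -> float:
    """Theoretical MTF ceiling for an aberration-free lens with a circular
    aperture in incoherent light. Real lenses fall below this curve."""
    cutoff = 1000.0 / (wavelength_um * f_number)   # cutoff frequency, cycles/mm
    x = abs(freq_cyc_per_mm) / cutoff
    if x >= 1.0:
        return 0.0
    phi = math.acos(x)
    return (2.0 / math.pi) * (phi - math.cos(phi) * math.sin(phi))


def nyquist_cyc_per_mm(pixel_pitch_um: float) -> float:
    """Highest spatial frequency the sensor can sample without aliasing."""
    return 1000.0 / (2.0 * pixel_pitch_um)
```

Evaluating `diffraction_mtf(nyquist_cyc_per_mm(p), wavelength, f_number)` for candidate pixel pitches and f-numbers shows how much contrast even a perfect lens could deliver at the finest detail the sensor resolves. This is one way to see why MTF requirements have to be set against the sensor, and not in isolation.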

As a result, the level of detail in images captured by our new lens is impressive not only in the center, where most lenses perform well, but also at the edges. A high level of detail across the whole field of view is critical for our purposes: the system must keep pilots and aircraft safe no matter where a threat appears. Thanks to the improved image quality, we have increased the detection range, enabling the system to detect and recognize an air hazard and warn the pilot earlier.

An important advantage of a custom-developed solution is the precise optical performance information we now have. With an off-the-shelf component, a customer sometimes knows only as much as the supplier chooses to disclose, and suppliers don’t always commit to informing customers if they change something like the materials or components inside the product, so the information cannot be considered reliable or complete. Thorlabs is different: they have strict requirements around any changes, even for their off-the-shelf components, which, as they told us, include notifying customers who buy multiple units. And, needless to say, for our custom lens assembly we appreciate being in control of all design parameters (such as MTF, transmission, and FOV) for each production item and having the guarantee that it is built as designed.

Casing

We put the VCU in a pressurized enclosure that we developed together with KAYA. The enclosure protects the VCU from moisture and salt and also manages temperature. It is filled with nitrogen and has a pressure valve and seals, with a hermetic adapter at the rear. On the front, facing the lens, is a glass cover made by Dontech with multiple layers serving several functions: grounding, hydrophobic and anti-reflective properties, and a heater and thermistor that prevent ice and moisture from building up on the outside.
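
The heater and thermistor in the glass cover together form a simple control loop. Below is a minimal sketch, assuming an NTC thermistor described by the common Beta-parameter model and an on/off heater with hysteresis. The component values (10 kΩ at 25 °C, B = 3950 K), the switching thresholds, and the `HeaterController` class are hypothetical, not the values or logic used in the VCU:

```python
import math


def ntc_temperature_c(r_ohm: float, r0_ohm: float = 10_000.0,
                      t0_c: float = 25.0, beta_k: float = 3950.0) -> float:
    """Temperature from NTC thermistor resistance (Beta-parameter model):
    1/T = 1/T0 + ln(R/R0)/B, with temperatures in kelvin."""
    t0_k = t0_c + 273.15
    inv_t = 1.0 / t0_k + math.log(r_ohm / r0_ohm) / beta_k
    return 1.0 / inv_t - 273.15


class HeaterController:
    """Bang-bang heater control with hysteresis (hypothetical thresholds)."""

    def __init__(self, on_below_c: float = 3.0, off_above_c: float = 8.0):
        if on_below_c >= off_above_c:
            raise ValueError("on threshold must be below off threshold")
        self.on_below_c = on_below_c
        self.off_above_c = off_above_c
        self.heating = False

    def update(self, glass_temp_c: float) -> bool:
        if glass_temp_c < self.on_below_c:
            self.heating = True
        elif glass_temp_c > self.off_above_c:
            self.heating = False
        # Between the thresholds the previous state is kept, which avoids
        # rapid on/off toggling around a single set point.
        return self.heating
```

A call such as `controller.update(ntc_temperature_c(measured_resistance))` would run once per sampling period; the hysteresis band is a design choice that trades temperature ripple on the glass against switching frequency.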

Certification

The VCU is a component in two of Daedalean’s visual traffic awareness systems: PilotEye for Part 23 aircraft and Ailumina Vista for Part 27 aircraft, both to be certified to DAL-C. We are currently pursuing STC (Supplemental Type Certificate) projects for them with the FAA and EASA. The EASA project is also part of Daedalean’s process of becoming an EASA-approved Design Organization (Part 21J).

Within these two projects, we are working on the tests and documentation that will provide evidence that the products comply with DO-254 for hardware and DO-178C for software.

The VCU is a significant subproject in both cases. We are assessing prototypes for performance and preparing the setup for vibration, temperature, lightning strike, and salt spray testing, along with the rest of the tests required by DO-160. We face a dynamic set of questions: Will our chosen sealing and pressurization methods be sufficient? How will our sensors behave under extreme temperatures? Have we correctly estimated the tolerances between components, such as the lens, camera, and enclosure, manufactured by different suppliers?
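
The last of these questions is a classic tolerance stack-up problem. Below is a minimal sketch of the two textbook ways to combine independent part tolerances: worst-case, where every part sits at its limit in the same direction, and root-sum-square (RSS), which assumes independent, centred, roughly normal variations. The example tolerances and the focus budget are hypothetical, not VCU values:

```python
import math
from typing import List, Tuple


def worst_case_stack(tolerances: List[float]) -> float:
    """Every part at its limit in the same direction."""
    return sum(abs(t) for t in tolerances)


def rss_stack(tolerances: List[float]) -> float:
    """Root-sum-square: statistical combination of independent variations."""
    return math.sqrt(sum(t * t for t in tolerances))


def fits(budget: float, tolerances: List[float]) -> Tuple[bool, bool]:
    """Whether the stack fits the budget under (worst-case, RSS) assumptions."""
    return worst_case_stack(tolerances) <= budget, rss_stack(tolerances) <= budget


if __name__ == "__main__":
    # Hypothetical axial tolerances in mm: lens mount, sensor seating,
    # enclosure interface, from three different suppliers.
    parts = [0.010, 0.015, 0.020]
    print(worst_case_stack(parts), rss_stack(parts), fits(0.03, parts))
```

When a stack passes under RSS but fails worst-case, the assumption of independent, centred variations is carrying the design. That is exactly what early prototype measurements can confirm or refute.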

We have a meticulous design process. It combines hardware, electronics, software, and optics, and we need extensive calibration and careful assembly to ensure everything works as intended. We perform design reviews that follow our standard procedures and guidelines. But we also test prototypes as early as possible to minimize risk on the way to the final design and verification.