Keynote speech to the European Cockpit Association, Brussels, 2021-11-26, on the occasion of its 30th anniversary.

Dr. Luuk van Dijk, Founder & CEO Daedalean

Thank you very much for giving me the opportunity to address such a distinguished audience today.

In 2016 I founded Daedalean to create an autopilot that "outperforms human pilots on every measurable dimension". Understandably, reactions are mixed when I tell people. The critical ones fall roughly into three categories:

- "We're already doing that, planes already fly themselves", which you know very well isn't true,

- "That is never going to work", which is entirely up to me to disprove,

- and finally, "you shouldn't want this", which does indeed raise a fundamental question.

What problem are we trying to solve? Flying is already safe; people happily pay for tickets to get from A to B through the air, you all have a great job, airlines are profitable. Why bother? Why look for new human sacrifices on the altar of progress?

Flying is pretty safe. In 2017, zero commercial airliners on scheduled flights crashed. While scheduled commercial air transport is generally very safe, the picture is grimmer in the rest of aviation, e.g. in privately flown Part 23 aircraft, where the probability of dying per hour is comparable to that of riding a motorcycle. Flying is not very profitable, at least for the operators, but it is profitable enough that, under capitalism, free agents still choose to operate airlines. But there is a third dimension: the volume of operations. Airspace use is at capacity, and there are shortages of pilots and air traffic controllers. The system as it is only works because Air Traffic Control tells everyone in controlled airspace to stay in their cubic nautical miles after filing detailed routing plans hours in advance.

People have ambitious plans for more intensive use of the airspace, specifically for urban and regional passenger and cargo transport. There are over 300 such projects listed on evtol.news, for example.

Now, over half of those consist of a few people in a garage with no idea how many hundreds of millions it will cost to get an airframe certified. Then, some of those that have raised those hundreds of millions are apparent investor scams that will never fly commercially and will cause some people severe reputational damage. Nevertheless, I believe a few will definitely reach the operational stage in a couple of years. There is no denying that electrification brings simplification and, therefore, opportunities for lower cost and higher safety.

We want to fly air taxis or smaller cargo transports at a higher density with an equal or better level of safety. Yet with IFR and separation, and with human-to-human pilot-ATC communication over a voice channel, today's system can tolerate maybe 10 or 20 aircraft over a large city. Irrespective of the airframes and operations, commercial air transport is a sector that has demand and wants to grow.

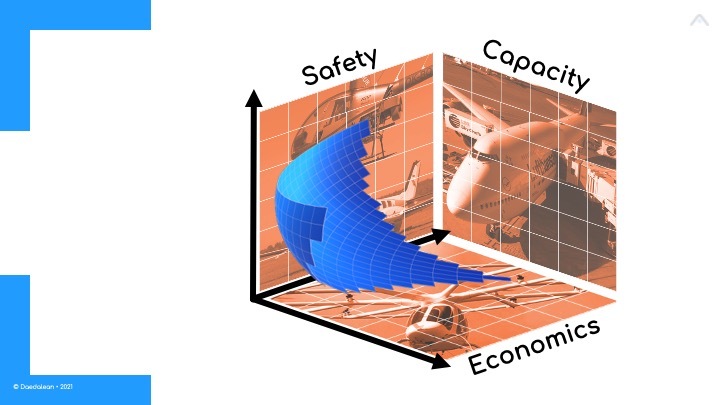

Around the time the Wright brothers took off, Vilfredo Federico Damaso Pareto came up with a way of thinking about multi-objective optimisation, in which several dimensions compete with each other along what is now called the Pareto front: the set of attainable points in the state space where you cannot improve one variable without making at least one other variable worse.
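To make the definition concrete, here is a minimal Python sketch that filters a list of options down to its Pareto front. The option list and its (cost, risk) scores are made-up illustrative numbers, and the convention that lower is better in every objective is an assumption of the sketch; nothing here models real aircraft or operations.

```python
from typing import List, Tuple

# One value per objective; in this sketch, lower is better in every dimension.
Point = Tuple[float, ...]


def dominates(a: Point, b: Point) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep the points that no other point dominates (O(n^2), fine for a sketch)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical design options scored as (cost, risk); the numbers are purely illustrative.
options = [(1.0, 5.0), (2.0, 3.0), (3.0, 3.5), (4.0, 1.0), (5.0, 2.0)]
print(pareto_front(options))  # -> [(1.0, 5.0), (2.0, 3.0), (4.0, 1.0)]
```

In these terms, moving the front forward means finding a new option that dominates points currently on it, rather than sliding along it from one trade-off to another.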

We can't make flying cheaper or increase the traffic density without making it less safe. We can't make it safer without making it more expensive or blocking off more airspace. There is a shortage of pilots, people do want to do more economic activity in the air, nobody wants to compromise the safety, so how do we move the Pareto front forward instead of being constrained to that surface of trade-offs?

To move the Pareto front without breaking everything, we need to apply some understanding of the Real World to engineer fundamentally better systems.

This is where Artificial Intelligence enters the picture.

Artificial Intelligence really is a marketing term. It has always been the thing we can almost – but not quite – do in computer science. In the 1950s it was a computer playing chess. It turns out that this wasn't actually that hard compared to answering the question "is there a cat in this video?" or "in this picture, where is the runway, the aircraft or the pedestrian?". But over the last decade, some of these things have become possible, thanks to enormous advances in computing power and the availability of so-called Big Data.

An in-depth review of the technology is out of scope for this talk, but what certainly does not help make the expectations more realistic is talking about it in anthropomorphised terms. The computer "learns", it "wants" to do things. What if it "wants" things it should not want or "learns" forbidden knowledge? Will we all be chased out of paradise by the new overlords we've created ourselves? Do we even understand how they work? Well, yes, we do, actually. There are some misconceptions there that would warrant a separate talk some other time. For now, I want to concentrate on the new opportunities.

Your definition of AI is as good as any, but "creating machines that use some form of 'understanding' to achieve some effect in the world" is a good way of phrasing it. By that definition, James Watt's centrifugal governor, which helped set off the industrial revolution by keeping steam engines from running out of control, was a prime example of something once barely imaginable that became established engineering practice almost overnight.

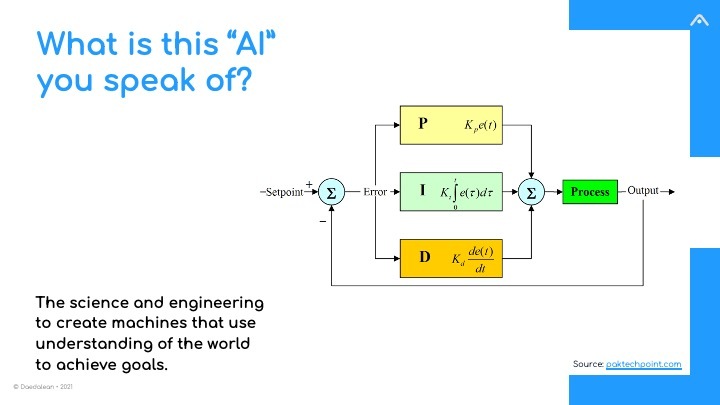

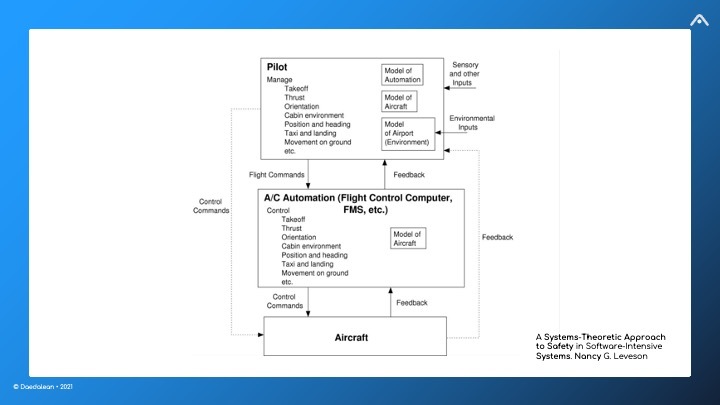

Today we recognise that governor as a proportional feedback controller, the forerunner of the PID controller, which is the prototype of almost every automatic control system that engineers build. Nearly every controller for a dynamical system is built from fast inner feedback loops whose setpoints and parameters are set by outer feedback loops, which in turn are controlled by further loops working on increasingly larger temporal and spatial scales.
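The nesting of loops can be illustrated with a toy Python sketch: a textbook PID controller used twice, with a slow outer loop turning altitude error into a climb-rate setpoint and a fast inner loop turning climb-rate error into a vertical acceleration command. The point-mass model, the gains, the constant sink and the 10:1 loop-rate ratio are all hypothetical values chosen for the example, not taken from any real autopilot.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""
    kp: float
    ki: float = 0.0
    kd: float = 0.0
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(target_alt: float = 100.0, t_end: float = 120.0, dt: float = 0.01) -> Tuple[float, float]:
    """Cascaded altitude hold on a hypothetical point-mass vertical model."""
    outer = PID(kp=0.2)          # altitude error (m) -> climb-rate setpoint (m/s)
    inner = PID(kp=2.0, ki=0.5)  # climb-rate error (m/s) -> acceleration command (m/s^2)
    sink = -1.0                  # constant disturbance (m/s^2); the inner integral cancels it
    max_climb = 5.0              # outer-loop output is clamped to +/- this climb rate
    outer_every = 10             # outer loop runs 10x slower than the inner loop
    alt, climb, climb_sp = 0.0, 0.0, 0.0
    for step in range(round(t_end / dt)):
        if step % outer_every == 0:  # slow outer loop: update the inner loop's setpoint
            raw_sp = outer.update(target_alt, alt, dt * outer_every)
            climb_sp = max(-max_climb, min(max_climb, raw_sp))
        accel = inner.update(climb_sp, climb, dt) + sink  # fast inner loop
        climb += accel * dt      # explicit Euler integration of the toy model
        alt += climb * dt
    return alt, climb


alt, climb = simulate()
print(f"final altitude {alt:.2f} m, climb rate {climb:.3f} m/s")
```

The inner loop's integral term removes the steady offset that the constant sink would otherwise leave; the outer loop can stay purely proportional because it only has to supply a setpoint that the inner loop already tracks.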

In a sense, what we called AI only yesterday is today a set of new technologies that make it possible to create types of control systems that were out of reach only a decade ago.

Before we go there, let's jump back 120 years: the Wright brothers' breakthrough depended on a truly interesting insight. Everyone else was trying to build inherently stable aircraft, which turned out to be uncontrollable in gusts. As bicycle makers, the Wrights took the radical step of making the aircraft less stable but thereby more agile, and relied on the human pilot to actively control the unstable vehicle.

Since then, civil aviation has evolved for 120 years based on the human in control.

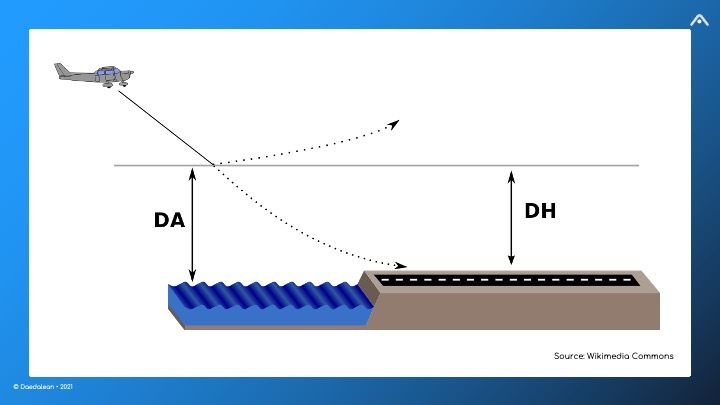

With all the increased instrumentation in the cockpit (and on the ground), flying has undeniably become safer. Yet, even today, this small aircraft from 80 years ago with almost no instruments can be flown safely and legally by anyone with a pair of working eyes, a functioning visual cortex, a PPL and a type rating. At the same time, today, no instrument can safely and legally operate an aircraft from take-off to landing.

The only conclusion we can draw from this is that every instrument in the cockpit is there to help human pilots fly more safely, but every instrument is optional. There is something that the pilot, and currently only the human pilot, does. That something is dealing with uncertainty or, to say the same thing in different words, managing risk.

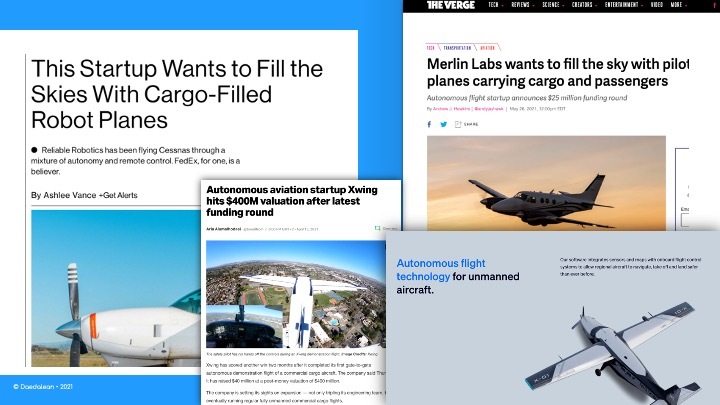

Ambitious and well-funded startups are pushing the limits of safe automation. However, the FAA won't let them fly without a safety pilot on board just yet.

You can get a long way, and build a valid new business model, by making better automation, and you can fill the remaining gap with operational constraints like fixed routes, good-weather-only operations and ample radar coverage from the ground.

But if we try to completely replace the pilot today with instruments that ‘push some buttons so the pilot doesn't have to’, and call it ‘simplified vehicle operations’, we will not get to a state where we can remove the pilot, because pushing the buttons was never his (or her) job.

The new instrument will just require a more skilled human to deal with inevitable failure modes, where it either fails to deal with a hazard or introduces a new hazard itself. We may inadvertently make things worse because the whole control loop becomes less robust and too complex to analyse.

In that sense, initiatives based on “business-as-usual” avionics systems for simplifying vehicle operation are misguided. We cannot get rid of the requirement for a human on board by simply adding more automation.

Now suppose we want to do this, remove the pilot – you can still disagree with me on whether we want to, but for now, bear with me – how do we break through this paradox?

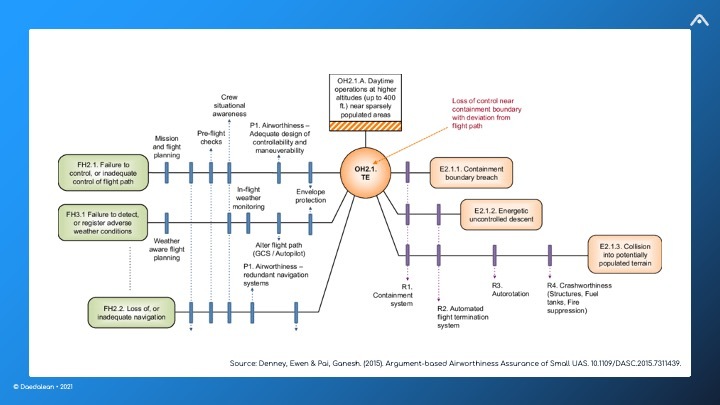

Look at hazard analysis and accident investigations: they are based on a static view of the world in which there is a chain of events that leads to an accident. Safety assurance is seen as making sure none of these chains can happen. When it comes to an accident investigation, this model looks for a so-called root cause, which – surprise surprise – is often the human.

Indeed when I explain the roadmap of Daedalean, I often get the question, “but, without a human pilot on board, who will bear the blame/legal responsibility if something happens?”

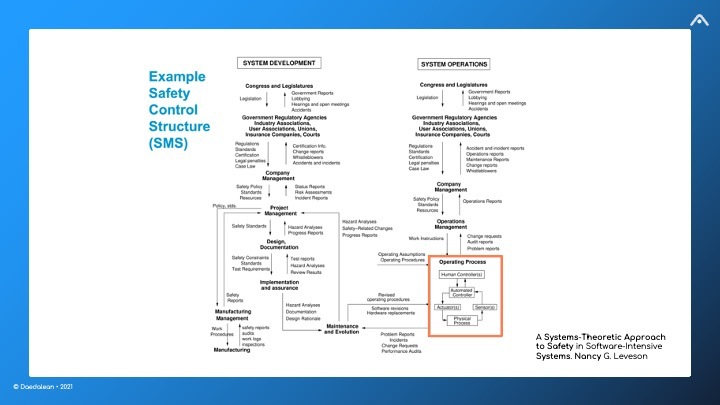

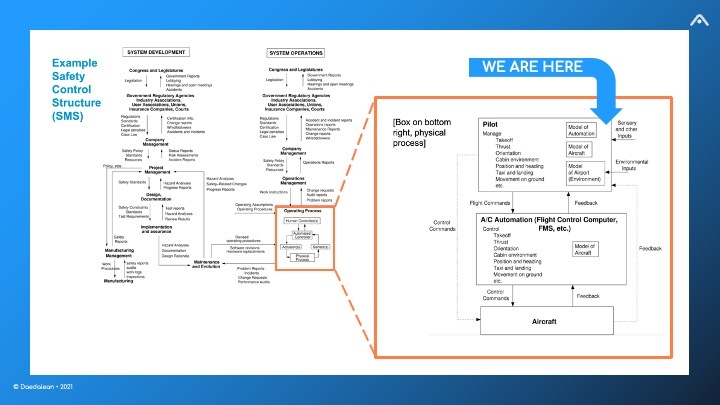

Prof. Nancy Leveson of MIT and her colleagues had a great insight: maintaining a safe state is also a control problem, not just a matter of guaranteeing ahead of time that catastrophic chains of events cannot happen.

For this, we have to consider a hierarchy of control loops, each of which tries to maintain safety at different timescales; and we have to look at the human pilot controlling the systems that control the aircraft in the context of even larger control loops.

A consequence of this view is that human operator error, pilot error and the like are evidence of a poorly designed system. Rather than looking for someone to blame, we should ask ourselves, “why did the human think that the actions he took were the right ones?” and “how did we ever design the systems like that?”

With this, we can now gain the perspective to design systems that take over that risk management rather than automate pushing the buttons.

When we talk about “modern AI” today, the kind that has only recently become possible, we mostly mean Machine Learning.

Machine Learning is a set of techniques that have grown increasingly powerful. Things that until recently were hard, like the cat in the picture, have now become feasible.

Machine Learning is based on statistics, which in turn is founded on probability theory. It turns out that if you want to build systems that can deal with uncertainty, you should not try to compose them from components that each (almost) certainly never fail. Instead, you have to take components that have a non-zero failure probability and build them into composite systems that can deal with that failure rate.

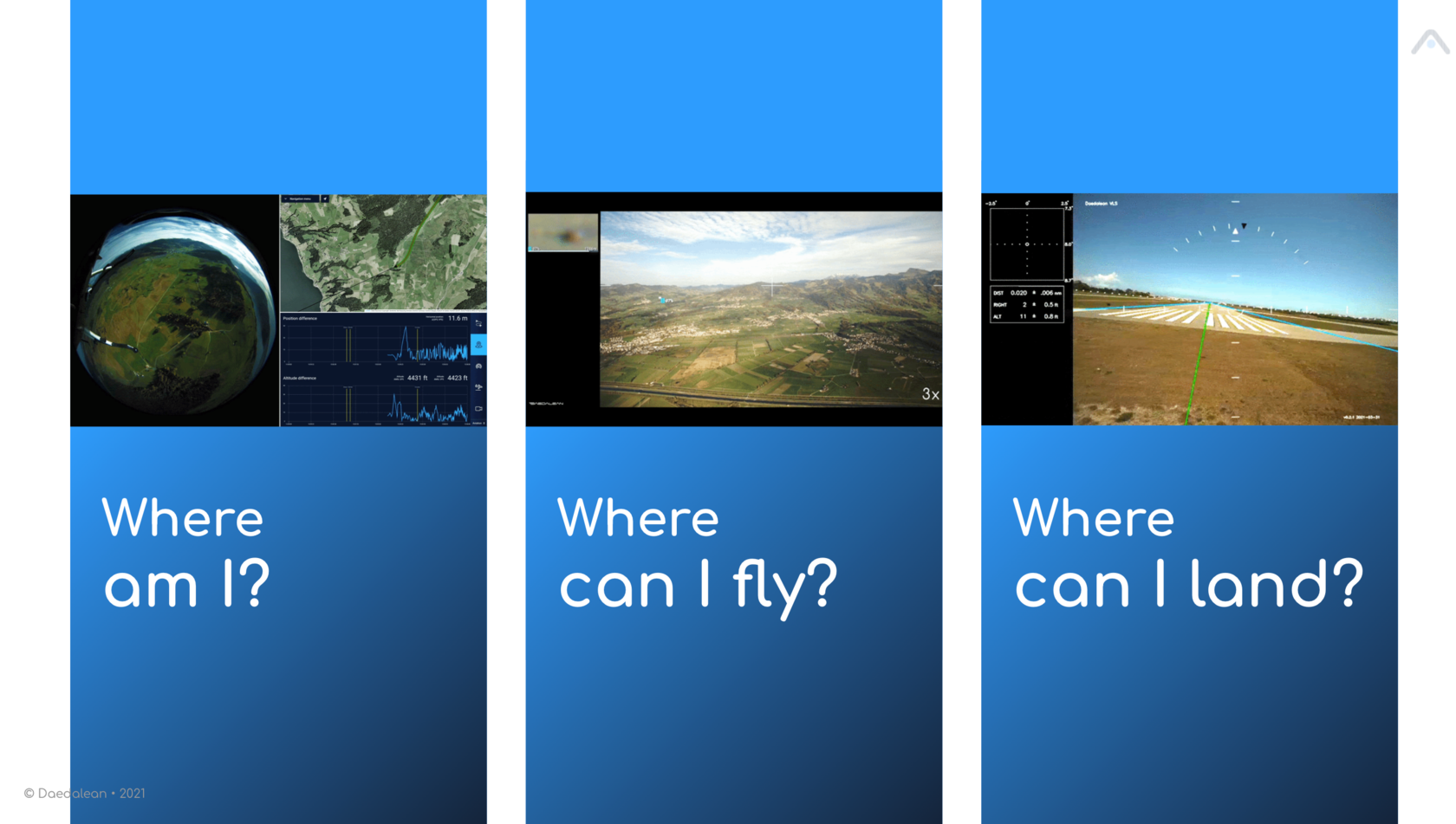

At Daedalean, we have built a system that uses nothing but visual input, just like the human pilot in that almost-uninstrumented aircraft from 80 years ago, to

- see where you are without GPS or radio or inertial navigation

- see where you can fly without ADS-B or RADAR or ATC

- see where you can land without ILS or PAPI

Why are these systems different from ‘classical’ avionics? They have to deal with possibly large uncertainties in the input, of the kind that until now was delegated wholesale to the human.

When the computer looks at a single image, its probability of wrongly identifying the position, an intruder or the runway may be, say, 2% or even 4%, which by the standards of traditional safety and software reliability analysis is atrocious. But we can fix this by constructing the system such that this 4% gets raised to a sufficiently high power. At the system level, the probability of failure is then in the 10^-7 to 10^-9 per-hour range that aerospace engineers are comfortable with.
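The arithmetic behind raising the per-image error rate to a sufficiently high power can be checked with a few lines of Python. This sketch makes the strong simplifying assumption that n per-frame errors are statistically independent, so the chance that all of them are wrong is p**n. Consecutive camera frames are in practice highly correlated, so a real system has to earn that independence by design, and the talk does not say how Daedalean does it. The sketch also ignores the conversion from per-decision to per-flight-hour rates.

```python
def frames_needed(p_frame: float, target: float) -> int:
    """Smallest n such that p_frame**n <= target.

    Assumes the n per-frame errors are statistically independent, which is a
    strong simplification that only holds if the system is designed for it.
    """
    if not (0.0 < p_frame < 1.0 and 0.0 < target < 1.0):
        raise ValueError("probabilities must lie strictly between 0 and 1")
    n, p_all_wrong = 1, p_frame
    while p_all_wrong > target:
        n += 1
        p_all_wrong *= p_frame  # probability that all n independent frames are wrong
    return n


for p in (0.02, 0.04):
    for target in (1e-7, 1e-9):
        n = frames_needed(p, target)
        print(f"per-frame error {p:.0%}, target {target:.0e}: {n} independent frames")
```

Under these assumptions, seven independent looks take a 4% per-frame error below 10^-9, and six suffice for 2%.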

Using this technology, we can make, for example, a system that can make an ‘abort landing/go around’ decision, or one that can decide that something is sufficiently likely to be a hazard that it is safer to take the risk of changing course.

As with the Wright brothers, giving up the goal of certainty at the lower level, and instead putting a control system on top to deal with the uncertainty, opens up the design space to include solutions that were unthinkable before.

And with this, we have clearly moved up a level in the control loop hierarchy, and we can now start building control systems that dynamically maintain safety and balance risks, hitherto clearly the exclusive domain of the members of your organisation.

There is no reason to believe computers will always be worse at that than you are. There is no reason the machine can’t reliably make the call to land in the Hudson when all engines are out, and then do so safely in adverse conditions.

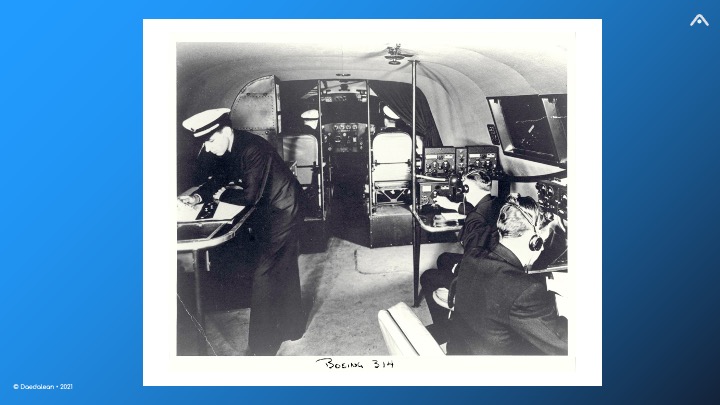

Take a look at this picture of a cockpit from 70 years ago. You’d be amazed to find this many people waiting for you in your cockpit today. Your flight engineer, navigator and radio operator have found meaningful careers off-board, and flying is actually safer. Change happens. But the arguments for change must be based on facts, not opinions. Some of these changes, e.g. going from three to two crew, were fought over on the basis of facts, and when hard data showed two was actually safer than three, the change happened.

Now, it is my personal opinion (for which I do not have the data yet) that single-pilot operations (SiPO, eMCO) will turn out to be harder and less safe than going directly all the way to full autonomy on board. The technology to make that possible and the related rules do not exist yet, but they are being worked on. Give it a decade, and I think these systems will exist, along with the data to prove that they are safer, cheaper and can fly at higher traffic densities. (If that data does not materialise, it would be industry-wide criminal negligence to force the change.)

However, that does not mean human pilots will be out of a job. With the increased volumes, higher safety and increased economic incentives, it means that the job will change. With the decisions on the timescales of minutes and hours safely delegated to a machine, the human pilots can manage risk more effectively on larger scales – and perhaps gradually off-board. Like in many professions, yours will be a different job over time, but there won’t be a reason not to have a big party in 10 years at the 40th anniversary of your organisation.

I thank you for your attention.